🧠 New Blog Post

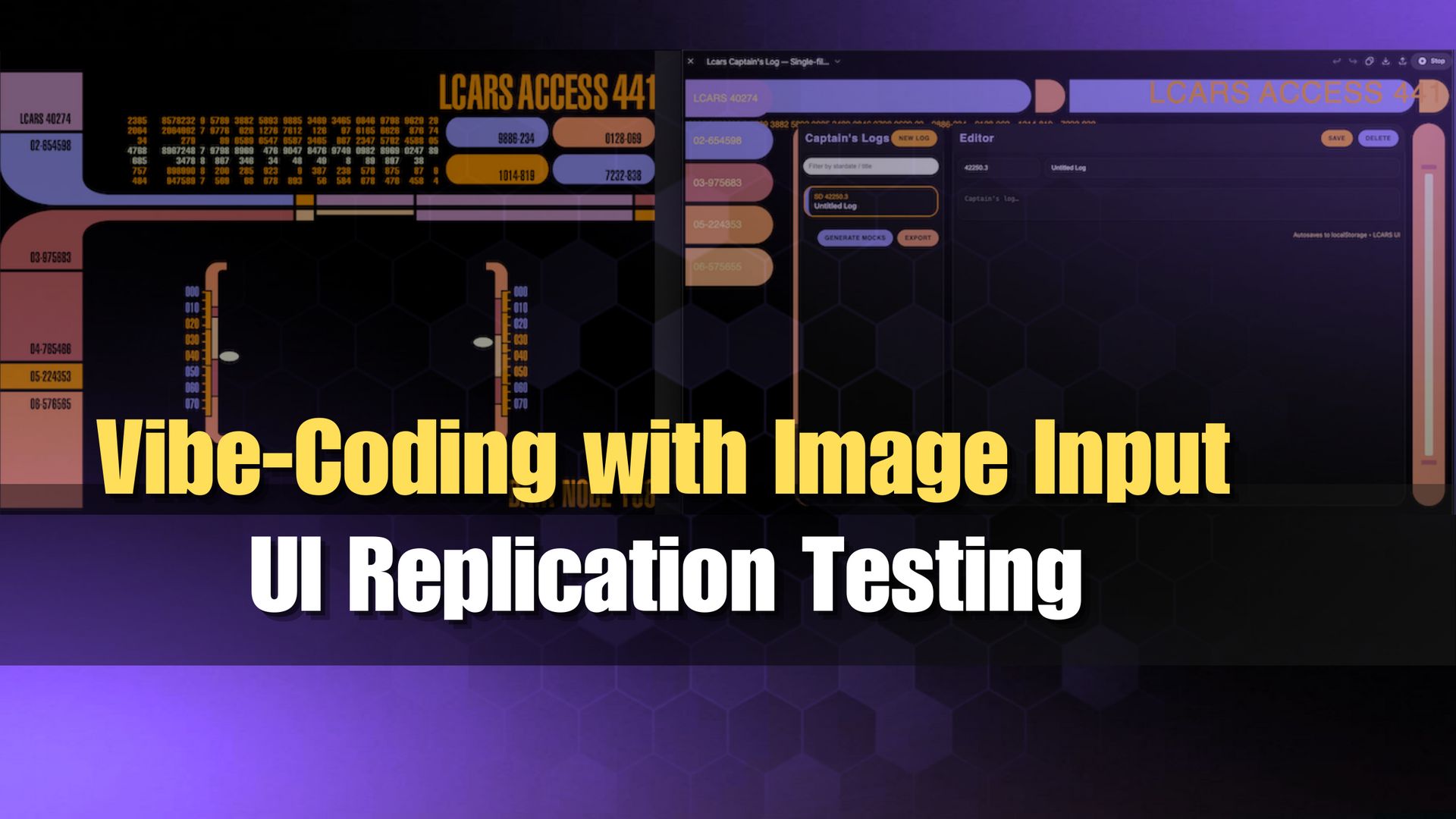

Which vibe coding tool is best at replicating a UI from an image? This weekend I tested Lovable, Bolt, v0, ChatGPT (4o and 5), Claude Sonnet 4, and Claude Opus 4.1 with the same prompt and reference image to see which one matched the image most closely in a single shot.

I wasn’t too surprised by the winner, but the worst performers were not what I expected. Still, the biggest lesson from this experiment wasn’t about which model or tool is better at reproducing a UI from an image.

🤖 AI & Dev Tools

AI search tools like Perplexity are great for research, but the sources they find aren’t always the most reliable. If you’re just looking for product reviews or DIY repair tips, Reddit threads may be good enough. But what about serious research, like writing a thesis or doing market research to guide business decisions?

Recently I found consensus.app, a search engine for academic papers that cites and links to real scientific studies. I asked it about ‘low-code adoption by enterprises’, and it generated a surprisingly detailed report with twenty different sources! All of them are legitimate research papers from respected sources like IEEE and the Journal of Applied Sciences. Check it out the next time you need facts to quote in a slide deck or report.

💡Tips & Tricks

SPOILER ALERT! If you haven’t read the new blog post above about replicating a UI with vibe coding tools, this tip sums up its most important finding. Normally the tip is separate from the blog post, but this one was so effective that I thought it deserved both a long-form post and its own tip:

TIP: 🖼️ > 📄 A Picture is Worth a Thousand Words!

After testing seven different vibe coding tools and models and comparing image-only prompts against image-plus-text prompts, I found that every model and tool produced more accurate results when given ONLY the image and a simple prompt saying ‘make it look like this’.

All the models performed worse when given a detailed text description of the desired interface, in addition to the image.

To me this shows that 1) they all have the ability to reproduce a UI from image alone, and 2) any attempt to enhance that initial prompt with text is likely to make the results worse.

If you have a picture of the UI you want (or can create one), provide it with minimal text in your first prompt. You can always follow up with more detailed text prompts after the initial results.

📺 Video Content

Last month at DevRelCon, Kevin Blanco and I set up a whole recording studio and scheduled interviews with DevRel folks from a bunch of different companies. We basically recorded a whole season of podcasts in a single day! But there was one interview that wasn’t planned at all.

Harish Mukhami, CEO of GibsonAI, stopped by to meet Kevin and me in person, since we had recently met over Zoom and the following week we just happened to be in NY, where he lives. We started chatting about their platform and how they are using agents to build backends, and it sounded like great content for another interview. So I asked him if we could record, since we already had everything set up.

It ended up being one of the most interesting interviews of the series! What really stood out to me is their approach to building for agents as the end user. They consider agents as another user persona, and build their platform and docs with agent access in mind.

👥 Community Picks

This week’s community pick comes from Confidence Okoghenun (@megaconfidence on YouTube), an old friend and coworker from my early days at Appsmith. In this video, Confidence demos a voice agent built with Twilio, Stripe, Cloudflare Workers, and OpenAI that you can call on the phone to chat with and place orders!

📚 From the Archives

AI is great at classifying text, but letting AI scan your text isn’t always an option. Companies can’t or won’t use AI, or let it access their data, for many reasons: security and privacy regulations at the company and government level, lack of trust due to hallucinations, and certain locations and industries without outside internet access. In all of these cases, text analysis is still needed, but AI cannot be used.

Text analysis has been around a lot longer than LLMs though, and there are plenty of libraries that can classify text, detect sentiment, and measure all kinds of other language properties without relying on AI. In this post, I explore several different JavaScript libraries and techniques for text analysis, and walk through the logic and math involved with each example.
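The post itself covers specific JavaScript libraries; the sketch below is not taken from it. It is a minimal TypeScript illustration of one classic non-AI technique, lexicon-based sentiment scoring, where each known word carries a fixed score and the text's score is the sum. The tiny word list, the negation rule, and the `analyzeSentiment` function are all hypothetical, chosen for illustration; real word lists such as AFINN contain thousands of scored entries.

```typescript
// Hypothetical mini-lexicon: word -> sentiment score.
// A Map avoids accidental hits on Object prototype keys like "constructor".
const LEXICON = new Map<string, number>([
  ["great", 3],
  ["love", 3],
  ["good", 2],
  ["fine", 1],
  ["slow", -1],
  ["bad", -2],
  ["hate", -3],
  ["terrible", -3],
]);

// Assumed simple rule: a negator flips the polarity of the very next token only.
const NEGATORS = new Set<string>(["not", "never", "no"]);

interface SentimentResult {
  score: number;       // sum of matched word scores
  comparative: number; // score divided by total token count
  matched: string[];   // lexicon words that contributed to the score
}

// Lowercase the text and split it into word tokens (letters and apostrophes).
function tokenize(text: string): string[] {
  return text.toLowerCase().match(/[a-z']+/g) ?? [];
}

function analyzeSentiment(text: string): SentimentResult {
  const tokens = tokenize(text);
  let score = 0;
  let negate = false;
  const matched: string[] = [];

  for (const token of tokens) {
    if (NEGATORS.has(token)) {
      negate = true; // applies to the next token
      continue;
    }
    const value = LEXICON.get(token);
    if (value !== undefined) {
      score += negate ? -value : value;
      matched.push(token);
    }
    negate = false; // negation never carries past one token
  }

  return {
    score,
    comparative: tokens.length > 0 ? score / tokens.length : 0,
    matched,
  };
}
```

For example, `analyzeSentiment("The docs are great but the build is not good")` returns a score of 1: "great" adds 3, and the negated "good" subtracts 2. Normalizing by token count gives a comparative score that lets short and long texts be compared on the same scale.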

If you enjoy this newsletter, please share it with others in your network. Got a question or comment? Log in at news.greenflux.us to share your thoughts.